An Ecosystem Approach to Assignments

DreamBox’s adaptive engine delivers personalized learning experiences to students, with little to no teacher input needed. While this is powerful in theory, teachers struggle to trust a technology they can’t see, understand, or influence. The original Assignments feature was developed to address this mistrust and give teachers much-needed insight and agency, but teachers reported many pain points with the experience.

In an effort to revive a product offering that held great potential for teachers and the business alike, I adopted a holistic ecosystem approach to Assignments, then identified, pitched, and designed high-impact improvements and expansions.

my role – strategy and vision, UX/UI Design, UX Research

my focus – systems thinking, research-driven strategy, data reporting

the team – PM, engineering

the legacy assignments feature

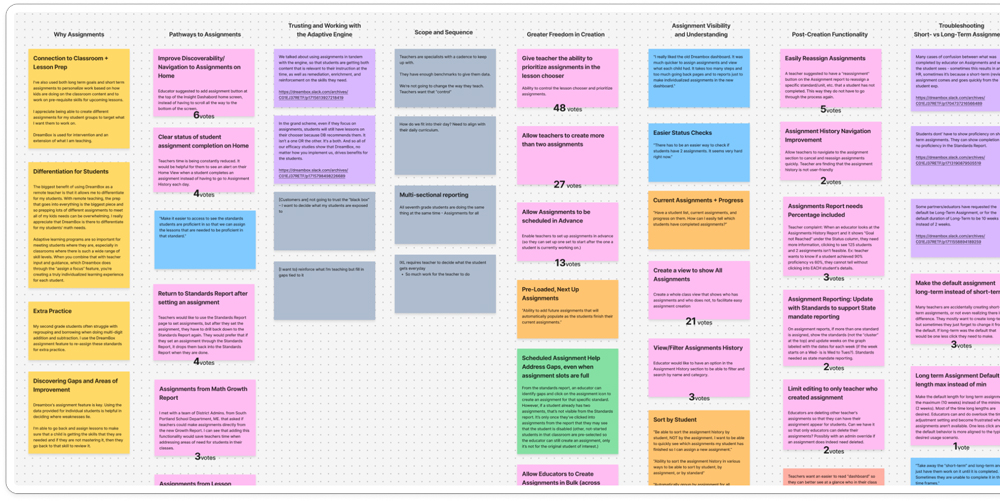

Drawing on years of user feedback and usability studies of our legacy Assignments offering, I consolidated our many feedback sources and, after reviewing them, identified the most prominent pain-point themes:

- Discoverability

- Activation

- Data Metrics

- Cognitive Load

a holistic view

With a limited dev window, PM initially scoped this work as two updates:

- Add Assignment flow: updating the code base, maintaining parity with legacy experience

- Assignment History report: minor updates

Having already familiarized myself deeply with Assignments pain points, I looked at, and beyond, the two touchpoints PM selected, exploring opportunities for improvement across the broader Assignments ecosystem. That exploration led me to an alternative solution, which I then presented to PM and dev:

- Discoverability: Increase visibility by repositioning Assignments into its own space in the primary nav

- Activation: At-a-glance insights offered through an Assignments Overview landing page

- Data Metrics: Metrics aligned with teachers' mental models

- Cognitive Load: Compartmentalized and streamlined assignments data within a new reporting ecosystem, expanded in-product education

refining project scope

Purposefully a pie-in-the-sky take on Assignments, my initial proposal sparked productive stakeholder conversations about Assignments milestones, both near-term and on the horizon. Balancing user and business goals with time and technical constraints, our product trio landed on two updates for the upcoming quarter:

- Add Assignment flow: updating the code base, with small (but mighty!) UX/UI improvements

- Assignment Overview (in place of the Assignment History report updates): a new at-a-glance experience that complements the legacy Assignment History report

add assignment flow (shipped)

The primary goal of this work was for devs to update the underlying code, a necessary technical investment to support future enhancements. Additionally, the team was able to sprinkle in some UX/UI improvements that, while light on dev lift, would bring more clarity, usability, and alignment with teacher workflows:

- Default assignment settings aligned with the most common and most valued selections

- A “Help Me Choose” modal to guide assignment selection

- Proximity-based layout adjustments for clarity

- Clear disabled/error state messaging when assignment limits are reached

assignment overview (in development)

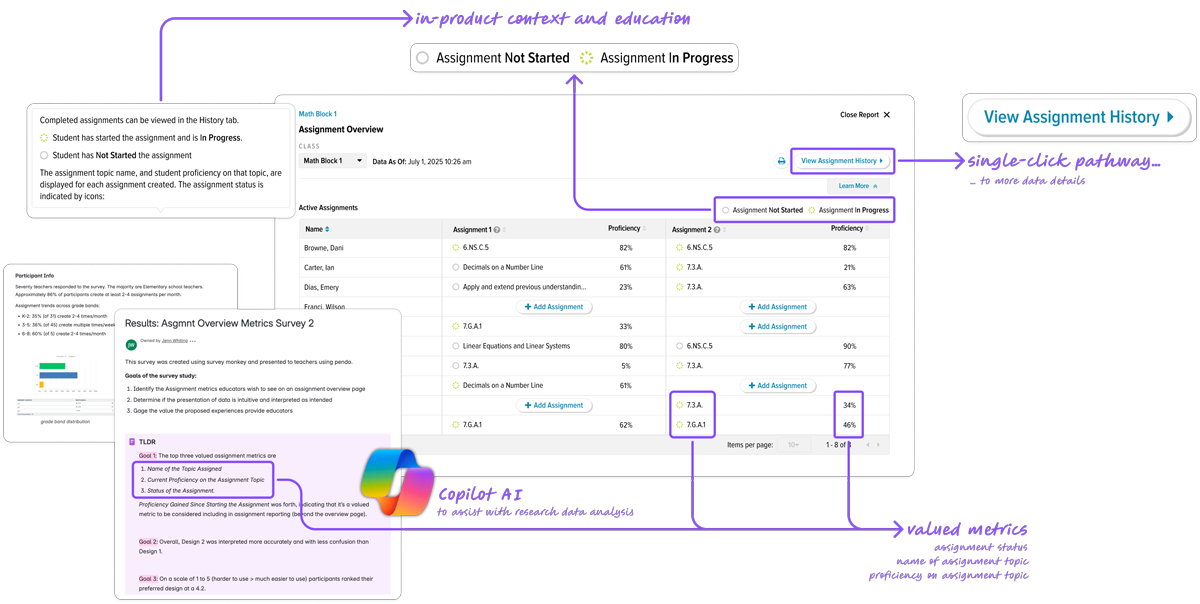

While the ecosystem solution I originally proposed didn’t fully align with our limited dev window, our product team committed to a partial implementation for the upcoming dev cycle:

- Discoverability: (out of scope for this milestone)

- Activation: At-a-glance insights offered through an Assignments Overview table

- Data Metrics: Status and proficiency metrics, vetted through two rounds of user feedback*

- Cognitive Load: Compartmentalized and streamlined assignments data between two touchpoints, expanded in-product education

*Two rounds of research validated the Overview concept and the metrics to include. With over 140 user responses spanning quantitative and qualitative data, I used Copilot to assist with the analysis.

measuring impact

Much of this work was released, or will be released, in summer 2025. Feedback so far has been positive but limited. Because product usage drops significantly over the school summer break, we'll assess our impact metrics in the fall and winter ahead.

Our measures of success include the following. Stay tuned for results!

- Increased assignment creation and monitoring

- Greater teacher confidence and satisfaction

- Higher DreamBox usage and growth among students

reflections

This project reaffirmed the importance of designing for transparency, trust, and simplicity, especially with complex systems. It was also a successful exercise in scope management: even with a limited dev window, a holistic and informed lens allowed us to drive high-impact improvements.